
Innovation @ Ntegra

November 13, 2025

USRT 2018 – The Future Of AI – Jim Spohrer

A summary of the keynote given by IBM’s Jim Spohrer at our USRT 2018 panel day at the Stanford Park Hotel, Palo Alto, on May 23rd, 2018.

Measuring Progress and Preparing

As part of this year’s annual Greenside US Research Tour, Ntegra hosted a full-day programme of presentations and a VC panel at the Stanford Park Hotel in Silicon Valley. The schedule opened with a thought-provoking keynote from Jim Spohrer, IBM Director of Cognitive Open Technology, based at IBM Research Almaden in San Jose, one of IBM’s worldwide research labs. The Almaden centre opened in 1986 and continues research that began in San Jose more than fifty years ago. The phrase “Silicon Valley” was first seen in print around 1970, but the region’s origins stretch back to when Stanford University, NASA Ames and IBM Research in San Jose were doing pioneering work on silicon-based transistors, hard disks and other foundations of computing.

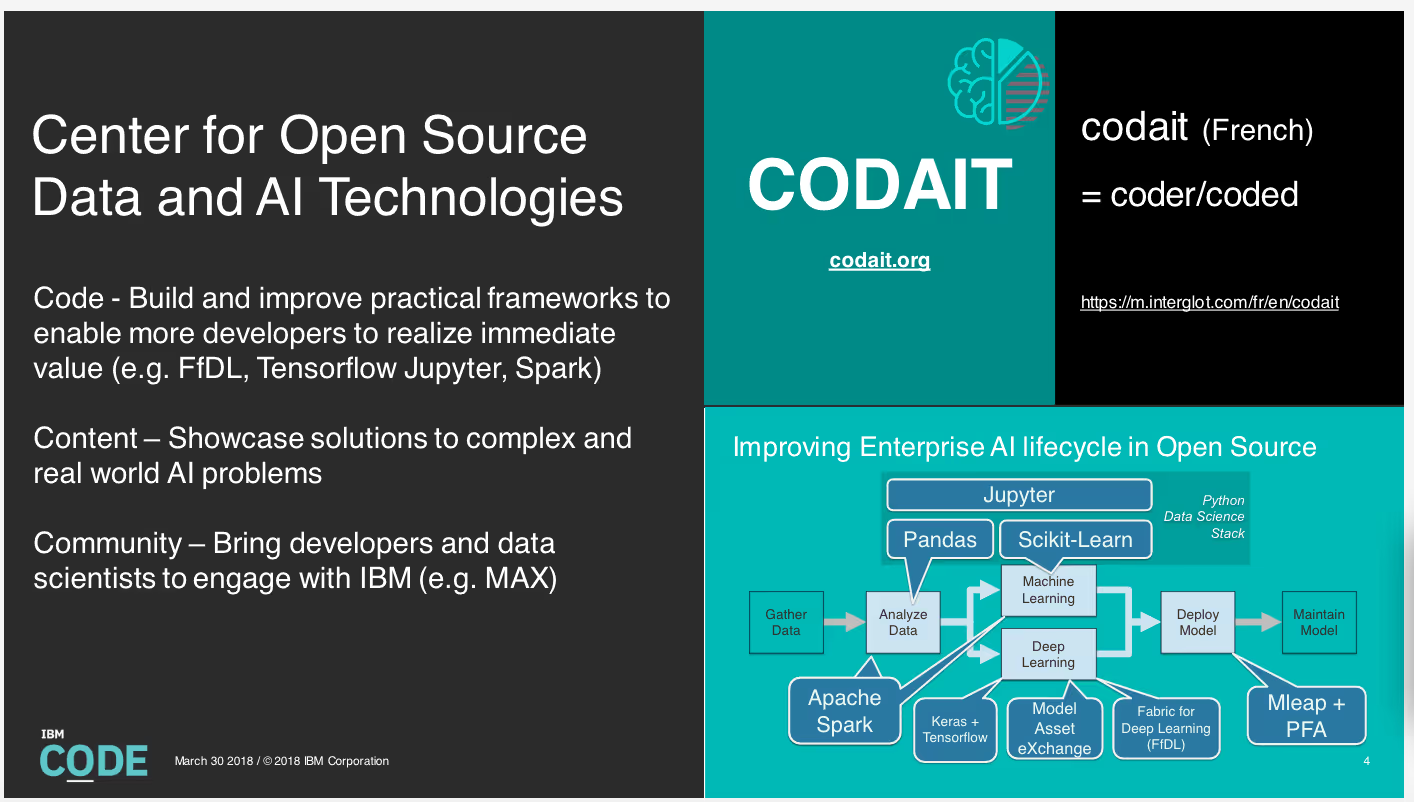

As well as being Director of IBM Global University Programmes Worldwide, Jim has served as Director of Almaden Services Research and as Chief Technology Officer for IBM Venture Capital Relations. Over the last few years Jim has campaigned internally for IBM to embrace open source in AI, latterly with great success. Half of his team are at IBM Watson West in San Francisco, working to transform IBM into a cognitive enterprise (see: Center for Open-Source Data and AI Technologies (CODAIT)).

With a technical background, Jim likes to pursue collaborative research with academia. IBM now has 15 global research laboratories with around 3000 researchers. It invests billions in R&D, paid for in part by significant patent licensing income, and has been #1 in the world for patents for over 27 years, producing more than any other company. When asked about IBM’s future, Jim was upbeat, suggesting the future is bright for companies transforming to use AI.

Jim says that “AI is hard” and far from solved. Google uses AI in nearly all its offerings (and open-sources key tools such as TensorFlow). Facebook, Amazon and Microsoft are all pushing in the same direction with varying degrees of success. In Jim’s view, IBM is the most mature in the B2B space.

Artificial Intelligence is popular again. However, pattern recognition does not equal AI. Deep learning only works if you have lots of data and compute power. We finally have both, so deep learning for pattern recognition is working well. But AI is more than deep learning for pattern recognition: it requires common sense reasoning, which Jim estimates will take another 5-10 years of research to deliver. How do we know this?

- Look at the AI leader-boards: https://www.kaggle.com

- Read ‘The Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our World’ by Pedro Domingos.

- Look at the free online cognitive classes at https://cognitiveclass.ai

Jim suggests that we should pose and address the following questions:

- What is the timeline for solving Artificial Intelligence and Intelligence Augmentation (IA)?

- Who are the leaders driving AI progress?

- What will the biggest benefits from AI be?

- What are the biggest risks associated with AI, and are they real?

- What are the implications for stakeholders?

- How should we prepare to get the benefits and avoid the risks?

Deep learning for AI pattern recognition depends on massive amounts of ‘labelled data’ and computing power (available since ~2012). Labelled data is simply input and output pairs (such as a sound and a word, an image and a word, an English sentence and a French sentence, or a road scene and car control settings). Deep learning needs both the inputs and the outputs in massive quantities. For example, on the order of 100,000 images of skin, half with skin cancer and half without, are needed to learn to recognise the presence of skin cancer.
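To make the idea of labelled data concrete, here is a minimal Python sketch that represents a labelled dataset as (input, output) pairs and checks the 50/50 class balance described in the skin-cancer example. The `LabelledExample` type, the file names and the four-item dataset are hypothetical placeholders; a real dataset of this kind would hold on the order of 100,000 images.

```python
from collections import Counter
from typing import NamedTuple


class LabelledExample(NamedTuple):
    """One labelled pair: an input and the output we want a model to learn."""
    image_path: str  # hypothetical: the input, e.g. a path to a skin image
    label: str       # the desired output for that input


def class_balance(dataset: list[LabelledExample]) -> dict[str, float]:
    """Return the fraction of examples carrying each label."""
    counts = Counter(example.label for example in dataset)
    total = len(dataset)
    return {label: count / total for label, count in counts.items()}


# Tiny hypothetical dataset; the article's example would need ~100,000 of these.
dataset = [
    LabelledExample("skin_0001.jpg", "cancer"),
    LabelledExample("skin_0002.jpg", "no_cancer"),
    LabelledExample("skin_0003.jpg", "cancer"),
    LabelledExample("skin_0004.jpg", "no_cancer"),
]

print(class_balance(dataset))  # {'cancer': 0.5, 'no_cancer': 0.5}
```

Keeping input and label together in one record makes it hard to mismatch them, and a balance check like this is a cheap sanity test before any training begins.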

Thanks to Moore’s Law, compute costs fall by roughly 1000x every 20 years. This, coupled with advances in machine learning, will enable the growth of personal digital assistants, which Jim expects to become commercially viable from about 2020 and to be in widespread use within 20 years. Some vertical applications may become mainstream much earlier than expected: we are already seeing growth in voice-enabled devices using Siri, Alexa and ‘Hey Google’ capabilities.
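The “1000x every 20 years” figure is the same as compute cost halving roughly every two years, because ten halvings give 2^10 = 1024. The short Python sketch below makes that arithmetic explicit; the two-year halving period is an assumption chosen to match the article’s figure, not a precise statement of Moore’s Law.

```python
def cost_reduction_factor(years: float, halving_period_years: float = 2.0) -> float:
    """Factor by which compute cost falls over `years`, assuming
    (as a rule of thumb) that cost halves every `halving_period_years`."""
    return 2 ** (years / halving_period_years)


def projected_cost(cost_today: float, years: float,
                   halving_period_years: float = 2.0) -> float:
    """Projected cost of the same amount of compute after `years`."""
    return cost_today / cost_reduction_factor(years, halving_period_years)


# Twenty years of halving every two years is 2**10 = 1024, i.e. "about 1000x".
print(cost_reduction_factor(20))       # 1024.0
print(projected_cost(1_000_000, 20))   # 976.5625
```

So compute that costs $1,000,000 today would, under this assumption, cost under $1000 twenty years from now.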

AI Leaders

Jim said, “Watching progress on open AI leader-boards is like gazing into a crystal ball.”

Who is winning? See: https://www.technologyreview.com/s/608112/who-is-winning-the-ai-race

Benefits & Implications

Jim expects AI to give people easier access to expertise and help them make better choices. More specifically:

- “Insanely great” labour productivity for trusted service providers

- Digital workers for healthcare, education, finance, etc.

- “Insanely great” collaborations with others on what matters most

- AI for IA: Augmented Intelligence and higher-value co-creation interactions

The risks of AI that get the most headlines are job loss and the emergence of super-intelligence, but Jim does not worry about these given the positive benefits for business and entrepreneurship. Shorter-term risks are more realistic: de-skilling of the workforce, and a lower cost for certain attacks, such as spear phishing, as bad actors automate tasks that were previously labour-intensive. Ntegra Greenside’s Jonathan Ellard has recently written a philosophical post discussing some of these points on his new personal blog.

To realise the benefits of AI and make the best use of it, all stakeholders need to be involved. As Jim puts it, “The best way to predict the future is to inspire the next generation of students to build it better.” Because AI is so revolutionary, we must consider everyone a stakeholder. This engagement should cut across society: from individuals to families, small businesses to large multinationals, and advisory groups to governments and technical specialists.

Given the risks Jim outlined, mitigation is essential. The report “The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation” recommends that:

- AI researchers acknowledge how their work can be used maliciously

- Policy makers learn from technical experts about these threats

- The AI community learns from the security community how best to safeguard against threats

- Ethical frameworks are developed and followed

- Discussions become more inclusive across AI scientists, policy makers, ethicists, businesses and the general public

The Future

By 2036 there will be both an accumulation and a distribution of knowledge in service systems globally. As knowledge accumulates, we need to ensure that service systems at all scales become more resilient. This will make it possible to rebuild service systems rapidly across scales. Key to this are T-shaped people (deep in one discipline, broad across many) who understand how rapid rebuilding works and ensure that knowledge is chunked, modularised and put into networks that support it.

To help prepare for the benefits and avoid the risks, Jim shares the following timeline with his students to provoke their thinking about the cognitive era:

- 2015 – about 9 months to build a formative Q&A system, at 40% accuracy

  - another 1-2 years and a team of 10-20 can get it to 90% accuracy by reducing the scope

  - today’s systems can only answer questions whose answers already appear explicitly in the text

  - IBM’s Project Debater is an example of where we would like to be in 5 years: https://www.youtube.com/watch?v=7g59PJxbGhY

  - more about the ambitions at http://cognitive-science.info

- 2025 – Watson will be able to rapidly ingest any textbook and produce a Q&A system

  - the Q&A system will rival C-grade (average) student performance on questions

- 2035 – as above, but rivalling average faculty performance on questions

- 2035 – peta-scale compute power will cost about $1000

  - peta-scale is good enough for narrow AI; exa-scale (1000x peta-scale) is needed for general AI

  - exa-scale is the equivalent compute of one person’s brain (which runs on about 20W)

- 2035 – nearly everyone has a cognitive mediator that knows them in many ways better than they know themselves

  - memory of all health information, memory of everyone you have ever interacted with, executive assistant, personal coach, process and memory aid, etc.

- 2055 – nearly everyone has 100 cognitive assistants that “work for them”

  - managing your cognitive assistant workforce is a course taught at university

In 2015 we were at the beginning of the beginning of the cognitive era. In 2025 we will be at the middle of the beginning, when it will be easy to generate average-student-level performance on questions from a textbook. In 2035 we will be at the end of the beginning (about 1/1000 of one brain’s compute power for $1000), when it will be easy to generate average-faculty-level performance on questions from a textbook.
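Combining the figures in the timeline with the 1000x-per-20-years rule of thumb gives a rough sense of when brain-scale compute might become affordable. The sketch below assumes peta-scale means 10^15 operations per second and exa-scale 10^18, and takes the $1000-for-peta-scale-in-2035 estimate at face value; the function name `year_when_affordable` is hypothetical, and the result illustrates the arithmetic rather than making a forecast.

```python
import math

PETA = 10**15  # operations per second (assumed meaning of "peta-scale")
EXA = 10**18   # operations per second (assumed meaning of "exa-scale")


def year_when_affordable(target_ops: int, base_ops: int, base_year: int,
                         factor_per_period: float = 1000.0,
                         period_years: float = 20.0) -> float:
    """Year when `target_ops` costs what `base_ops` cost in `base_year`,
    assuming cost per operation falls by `factor_per_period` every `period_years`."""
    scale_up = target_ops / base_ops
    periods_needed = math.log(scale_up) / math.log(factor_per_period)
    return base_year + periods_needed * period_years


print(EXA // PETA)                             # 1000: exa-scale is 1000x peta-scale
print(year_when_affordable(EXA, PETA, 2035))  # 2055.0
```

On these assumptions, one brain’s worth of compute for about $1000 arrives around 2055, which lines up with the 2055 milestone above only because both rest on the same 1000x-per-20-years rule.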

By 2055, roughly two 20-year generations from now, the cognitive era will be in full force. Jim suggests cell phones will likely become body suits, with burst-mode super-strength and super-safety features:

- Suits – body suit cell phones

- Cognitive mediators will read everything for us and relate the information to us, to what we already know, and to our goals.

- Combined personal coach, executive assistant, personal research team.

The key is knowing which problem to work on next. Jim’s answer is energy, water, food and wellness; see this video: https://www.youtube.com/watch?v=YY7f1t9y9a0

Resources

To prepare for the future of AI, Jim recommended several resources:

- IBM’s Center for Open-Source Data and AI Technologies (CODAIT.org)

- GitHub (github.com): “…isn’t just for programmers. Everyone should have a GitHub account.” GitHub is like Wikipedia with code that executes (code puts knowledge into action). Jim predicts that in 10 years programming will be like chess: it will be solved!

- Kaggle, “…leader-boards for coding competitions, acquired by Google 2 years ago”
